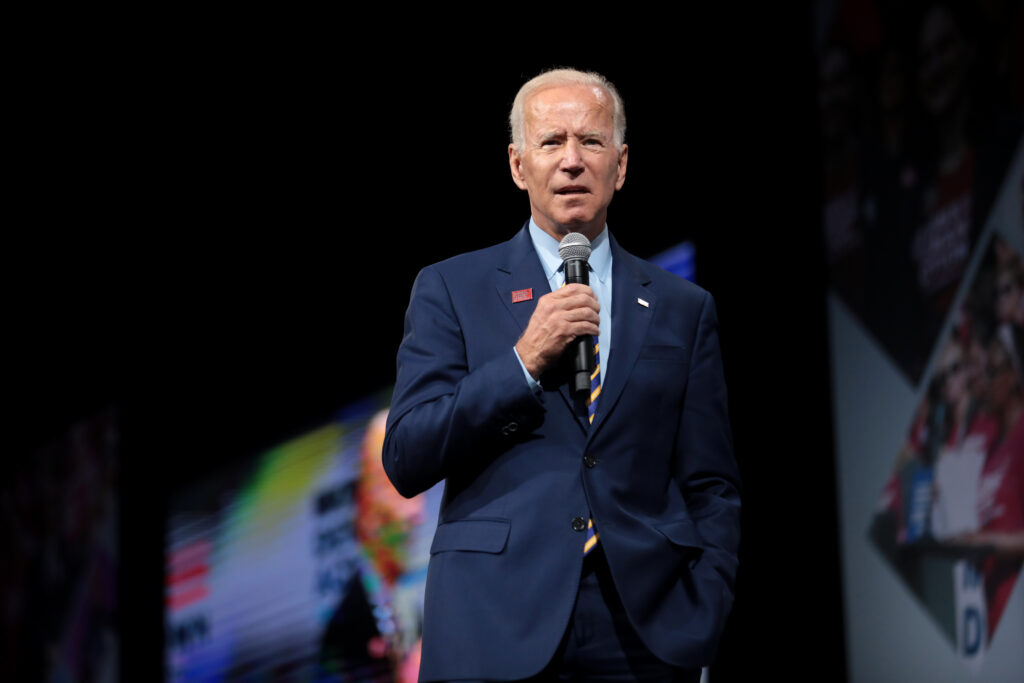

President Biden Issued an Executive Order To Make AI Safer

It turns out that trying to regulate Artificial Intelligence is a frustrating quest for humans.

Joe Biden stepped in this week where Congress and other regulators have not dared to tread, issuing an executive order aimed at making AI a bit safer.

Of course, he did so while courting Big Tech, whose companies took part in shaping the order, and while slapping Congress for lots of talk but no action on the issue.

It was a foray into balancing U.S. incentives for innovation against concerns that the emerging technologies we lump together as artificial intelligence could prove dangerous as tools for exacerbating bias, displacing workers and undermining our politics and national security.

At heart, the government wants Big Tech to set up more formal safety processes: development “red teams” that challenge each new technological advance, and information sharing across companies about what seems dangerous. It also wants its own agencies to mitigate the technology’s risks even while exploring how best to accommodate AI in their own policies and procedures. Not exactly real restrictions.

The companies also got Biden to support changes in immigration law that would make it easier to attract international talent in these tech fields.

In the end, of course, the White House is acting because Congress is not, either on defining and regulating the technology or on immigration policy. Though Senate Majority Leader Chuck Schumer has vowed bipartisan study and efforts towards regulation, no one is acting as if this is a priority.

Sharing Safety Information

According to The Washington Post, tech companies would be tasked with reporting regularly to the government on the risks posed by artificial intelligence. Just how that will work, and what it means, is not yet clear, of course, but what matters seems to be that there is any attempt at all to bring order to the tech Wild West.

Biden relied on the 1950 Defense Production Act to require companies to share internal “red-teaming” studies on issues that could affect people’s rights or safety, and he told various agencies to monitor and evaluate deployed AI for disruption.

Remember, this order is coming from the same government that can’t figure out how to regulate Facebook, Twitter/X and other social media giants over postings that defy common sense on misinformation, or that addict the young in ways we increasingly find troubling and dangerous.

Businesses and tech developers are moving fast to explore AI’s possibilities as a way to rethink production and labor costs. Medical and scientific researchers are pouncing on its potential for speeding medical and pharmaceutical advances. Partisan political advocates are looking for ways to attack or defend candidates. Nations with ill intent are exploring how to use artificial intelligence to inflame conflict among their enemies and promote their own causes.

The Big Tech companies have been actively lobbying for some kinds of regulation (presuming, of course, that they have a hand in setting it), if only to establish standards that will serve as legal bulwarks in the very near future. Over the summer, the administration said it was gathering companies such as OpenAI and Google to offer advice on voluntary commitments to public safety.

What has become painfully obvious is that the developers of the fastest-learning machines don’t fully understand exactly how the learning happens or how far robotics will go.

In short, governments are struggling to ensure both that robots don’t end up directing humans and that our own inventors keep the upper hand in innovation over equally motivated inventors in other countries.

There’s a tech development war under way with no rules.

The Rest of the World

While regulation in the United States has lagged, other countries are moving ahead with efforts to regulate advanced AI systems. By the end of the year, the European Union is expected to have an AI Act, a wide-ranging package that aims to protect consumers from potentially dangerous applications of AI. China has boosted the growth of AI tools while keeping a government grip on what information the systems make available to the public.

Vice President Kamala Harris will be at a British AI summit this week, where global leaders want to discuss the riskiest applications of the technology. Britain is more focused on guaranteeing human control over robots than on regulating developers.

Meanwhile, most of our own most vocal members of Congress spin constantly over the perception that controls on Facebook misinformation amount to partisan persecution of free speech, or that the governing Chinese Communist Party is seeking to control all information outlets in the United States for its own political purposes.

Congress can’t even figure out whether it is concerned about privacy, online safety, the effect on jobs, scientific advance or national security.

The question at hand, as we see increasingly rapid adoption of artificial intelligence, is whether there are any guardrails at all for a set of technologies we hardly understand. As it stands, the Biden effort falls short of congressional action, but it is the only effort produced to date.

Then again, government officials are only human.